Proposed Title:

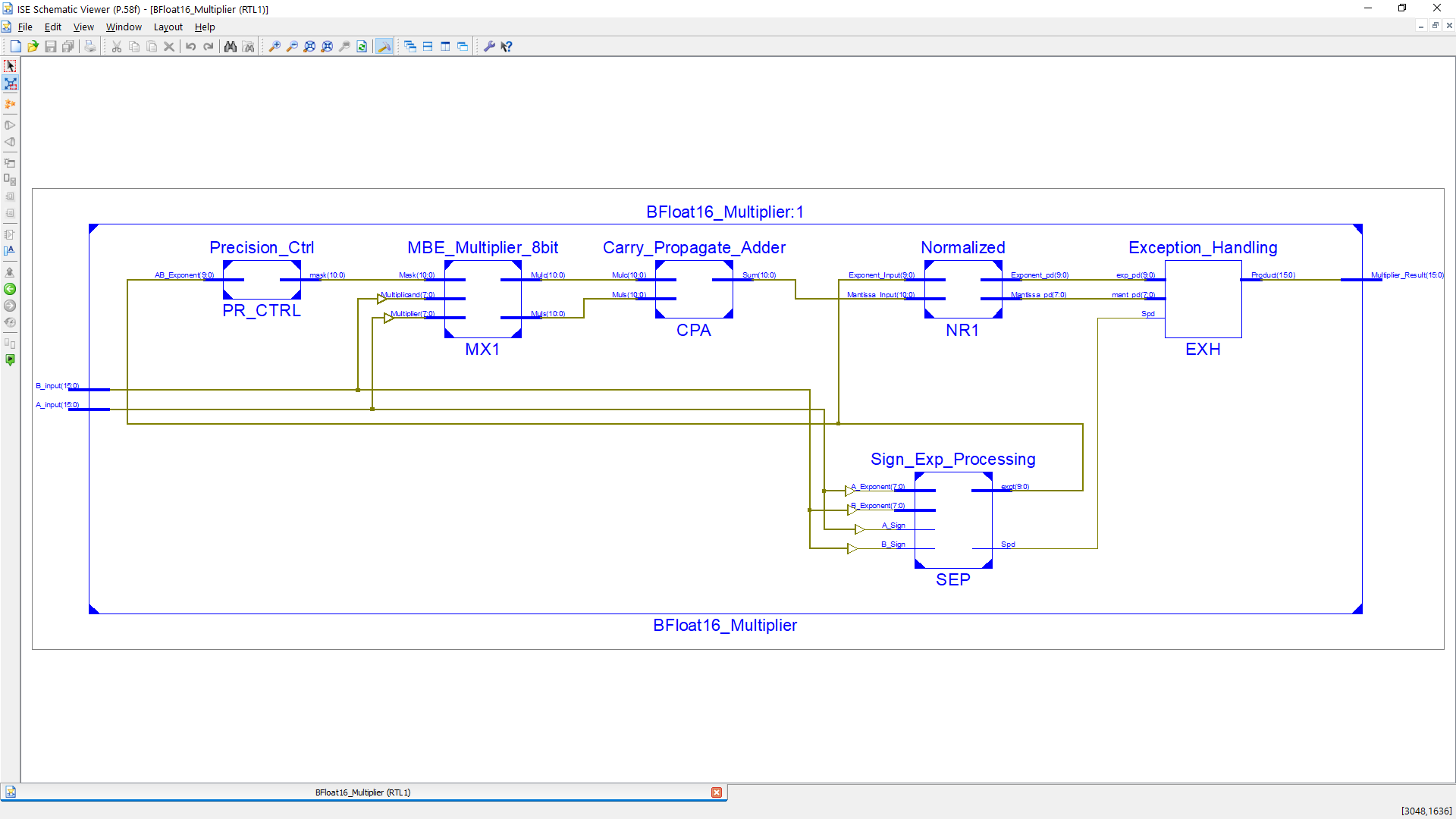

FPGA Implementation of 16-Bit Variable Precision Floating Point Multiplier using XOR-MUX Modified Booth Encoding Method with Wallace Tree Adder

Improvements in this project:

To design the 16-bit variable-precision floating-point multiplier using an area-, delay-, and power-efficient XOR-MUX full adder instead of a conventional full adder.

To reduce hardware resources by using a Booth multiplier and Wallace tree adders for the mantissa multiplication.
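As a rough behavioral illustration of the XOR-MUX full adder idea, the sketch below builds the sum from two XOR operations and selects the carry with a 2:1 multiplexer, then checks it against a conventional AND-OR full adder. The function names (`mux2`, `xor_mux_full_adder`, `conventional_full_adder`) are hypothetical and chosen only for this example; the gate-level structure used in the actual project may differ.

```python
from itertools import product

def mux2(sel, d0, d1):
    """2:1 multiplexer: returns d1 when sel is 1, otherwise d0."""
    return d1 if sel else d0

def xor_mux_full_adder(a, b, cin):
    """One-bit full adder from two XORs and one 2:1 MUX (illustrative model)."""
    p = a ^ b               # propagate signal
    s = p ^ cin             # sum bit
    cout = mux2(p, a, cin)  # a == b: carry equals a; a != b: carry equals cin
    return s, cout

def conventional_full_adder(a, b, cin):
    """Reference full adder using the AND-OR majority form for the carry."""
    s = a ^ b ^ cin
    cout = (a & b) | (b & cin) | (a & cin)
    return s, cout

# Exhaustively compare both adders over all eight input combinations.
mismatches = [bits for bits in product((0, 1), repeat=3)
              if xor_mux_full_adder(*bits) != conventional_full_adder(*bits)]
print(mismatches)  # prints []
```

The carry multiplexer replaces the three AND gates and the OR gate of the majority function, which is where the area saving of this style of adder would be expected to come from.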
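The combination of Booth encoding and a Wallace tree for the mantissa product can be sketched in software as follows. This is a behavioral model under stated assumptions, not the project's Verilog: it uses radix-4 modified Booth recoding, a word-level 3:2 carry-save step standing in for a row of full adders, and an 8-bit mantissa (7 BFloat16 fraction bits plus the hidden one). All function names are hypothetical.

```python
def booth_radix4_digits(y, bits):
    """Recode a two's-complement `bits`-wide multiplier into radix-4 digits in {-2,...,2}."""
    assert bits % 2 == 0, "radix-4 recoding consumes two bits per digit"
    y &= (1 << bits) - 1
    digits = []
    prev = 0  # implicit bit y[-1] = 0
    for i in range(0, bits, 2):
        b0 = (y >> i) & 1
        b1 = (y >> (i + 1)) & 1
        digits.append(b0 + prev - 2 * b1)
        prev = b1
    return digits

def csa(a, b, c, mask):
    """3:2 compressor: three words in, a sum word and a shifted carry word out."""
    s = a ^ b ^ c
    carry = (((a & b) | (b & c) | (a & c)) << 1) & mask
    return s, carry

def wallace_reduce(rows, mask):
    """Compress rows three at a time until two remain, then do one final addition."""
    rows = list(rows)
    while len(rows) > 2:
        full = len(rows) - len(rows) % 3
        nxt = []
        for i in range(0, full, 3):
            nxt.extend(csa(rows[i], rows[i + 1], rows[i + 2], mask))
        nxt.extend(rows[full:])  # leftover rows pass through to the next stage
        rows = nxt
    return sum(rows) & mask

def booth_wallace_multiply(x, y, bits=8):
    """Unsigned bits x bits multiply using Booth rows and carry-save reduction."""
    ext = bits + 2 - (bits % 2)  # zero-extend so the signed Booth view of y is non-negative
    width = 2 * ext
    mask = (1 << width) - 1
    rows = [((d * x) << (2 * k)) & mask  # negative rows wrap to two's complement
            for k, d in enumerate(booth_radix4_digits(y, ext))]
    return wallace_reduce(rows, mask)

# Exhaustive check for 8-bit mantissas.
ok = all(booth_wallace_multiply(x, y) == x * y
         for x in range(256) for y in range(256))
print(ok)  # prints True
```

With radix-4 recoding, an 8-bit mantissa produces five partial-product rows in this model instead of eight, so fewer compressor stages are needed before the final carry-propagate addition.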

Software Implementation:

- ModelSim

- Xilinx

Proposed System:

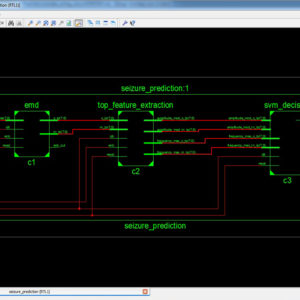

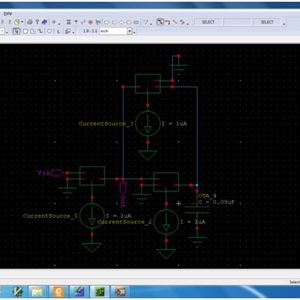

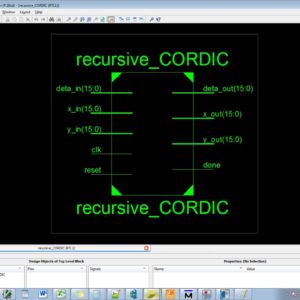

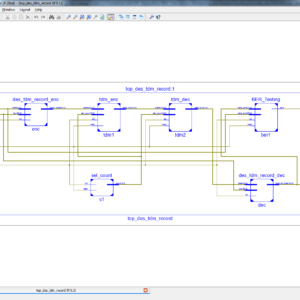

This work proposes a variable-precision approximate floating-point multiplier intended to make deep learning computation more energy efficient. The architecture supports approximate multiplication in the BFloat16 format. In deep learning models, the input and output activations often follow a normal distribution, so, inspired by the posit format, numbers of different magnitudes can be represented with different precisions. In the proposed architecture, posit encoding is used to change the level of approximation, and the value of the product exponent determines the precision of the computation: for a large exponent, the mantissa multiplication is performed with lower precision, and for a small exponent, it is performed with higher precision. Truncation is used as the approximation method, and the number of bit positions truncated is determined by the value of the product exponent. To reduce the logic size of the variable-precision floating-point multiplier, the proposed architecture uses an area-, delay-, and power-efficient XOR-MUX Modified Booth Encoding method with a Wallace tree adder, which reduces the number of logic gates in the multiplier. Finally, the design is developed in Verilog HDL, synthesized on a Xilinx Virtex-5 FPGA, and evaluated in terms of area, delay, and power.
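The exponent-controlled truncation described above can be illustrated with a small behavioral model. The sketch below is an assumption-laden example, not the project's design: the truncation schedule in `truncated_bits` (0 bits for a non-positive unbiased product exponent, up to 4 bits otherwise) is hypothetical, truncation is applied to the low-order bits of both operand mantissas, and only normal, finite BFloat16 values are handled (no rounding, overflow, infinity, or NaN handling).

```python
import struct

def float_to_bf16(x):
    """BFloat16 bit pattern of x: the top 16 bits of its IEEE-754 binary32 encoding."""
    return struct.unpack(">I", struct.pack(">f", x))[0] >> 16

def bf16_to_float(h):
    """Expand a BFloat16 bit pattern back to a Python float."""
    return struct.unpack(">f", struct.pack(">I", (h & 0xFFFF) << 16))[0]

def truncated_bits(unbiased_exp):
    """Hypothetical schedule: larger product exponent, more mantissa bits dropped."""
    if unbiased_exp <= 0:
        return 0
    return min(unbiased_exp, 4)

def approx_bf16_mul(a_bits, b_bits):
    """Approximate BFloat16 multiply with exponent-dependent mantissa truncation."""
    sign = ((a_bits ^ b_bits) >> 15) & 1
    ea, eb = (a_bits >> 7) & 0xFF, (b_bits >> 7) & 0xFF
    if ea == 0 or eb == 0:              # treat zero and subnormal inputs as zero
        return sign << 15
    unbiased = (ea - 127) + (eb - 127)  # product exponent before normalization
    t = truncated_bits(unbiased)
    keep = 0xFF & ~((1 << t) - 1)       # mask that clears the t low-order bits
    ma = (0x80 | (a_bits & 0x7F)) & keep  # 8-bit mantissa with hidden one
    mb = (0x80 | (b_bits & 0x7F)) & keep
    p = ma * mb                         # 16-bit product in [2**14, 2**16)
    exp = unbiased + 127
    if p & 0x8000:                      # product in [2, 4): shift right, bump exponent
        exp += 1
        frac = (p >> 8) & 0x7F
    else:                               # product in [1, 2)
        frac = (p >> 7) & 0x7F
    return (sign << 15) | ((exp & 0xFF) << 7) | frac

exact_small = bf16_to_float(approx_bf16_mul(float_to_bf16(0.5), float_to_bf16(0.5)))
approx_large = bf16_to_float(approx_bf16_mul(float_to_bf16(100.0), float_to_bf16(3.0)))
print(exact_small)   # prints 0.25
print(approx_large)  # prints 288.0 (exact product is 300.0)
```

In this model, the small-exponent product keeps all mantissa bits and is exact, while the large-exponent product has four low-order bits cleared in each operand, trading accuracy for fewer active partial-product bits.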

"Thanks for visiting this project page. Buy it soon."

Variable-Precision Approximate Floating-Point Multiplier for Efficient Deep Learning Computation

Terms & Conditions:

- Customers are advised to watch the project output video before payment to verify the requirements; corrections will be applicable.

- After payment, corrections to the project are accepted, but changes in requirements will incur updated charges based on the new requirements.

- After payment, students' doubts, corrections, software errors, hardware errors, and coding doubts will be addressed.

- Online support will not be given more than 3 times.

- The first session provides a complete explanation with video file support; the other two sessions provide doubt clarification only.

- If there is any issue with a software license or a system error, we will support and rectify it by the end of the day.

- Extra charges apply for a duplicate bill copy. The bill must be paid in full; no part payment will be accepted.

- After payment, you must send the payment receipt to our email ID.

- Powered by NXFEE INNOVATION, Pondicherry.

Payment Method:

- Pay using the Add to Cart method on this page

- Deposit cash/cheque into our account.

- Pay via Google Pay/PhonePe: +91 9789443203

- Send Cheque through courier

- Visit our office directly

- Pay using PayPal: Click here to get the NXFEE PayPal link

Bank Accounts

HDFC BANK ACCOUNT:

- NXFEE INNOVATION,

HDFC BANK, MAIN BRANCH, PONDICHERRY-605004.

INDIA,

ACC NO. 50200013195971,

IFSC CODE: HDFC0000407.